The rise of digital tools in diabetes care is reshaping how individuals manage chronic conditions, offering new levels of personalization, access, and autonomy. Joe Kiani, founder of Masimo and Willow Laboratories, has been a vocal advocate for pairing innovation with accountability, especially as health platforms grow more integrated into daily decision-making. While AI-powered apps and real-time wearables open the door to earlier interventions and stronger user engagement, the pace of change has outstripped existing safeguards. The result is a growing need for clearer standards that protect users while supporting the continued evolution of digital care.

The stakes are growing, and thoughtful oversight is essential to protect users and preserve trust. As digital platforms increasingly influence how care is delivered and how individuals interpret their health, regulatory clarity and ethical design have become urgent priorities. Ensuring that these tools support safe, private, and equitable care will shape the future of diabetes management.

The Current Regulatory Landscape

Digital diabetes tools sit at the intersection of consumer wellness and clinical medicine, creating unique regulatory challenges. While traditional medical devices are subject to rigorous FDA oversight, many health apps and wearables fall into gray areas.

The FDA classifies digital health tools based on risk. High-risk technologies, such as insulin delivery systems or diagnostic software, typically undergo full regulatory review. Lower-risk tools, like wellness apps that offer lifestyle tips, may not be reviewed at all. This uneven coverage raises safety concerns, especially when an app makes medical claims or influences clinical decisions.

The pace of innovation compounds the challenge. Regulatory frameworks often lag behind technological advancement, so many digital health solutions enter the market without robust validation or clear compliance expectations.

Balancing Innovation and Oversight

Navigating regulations while pushing boundaries can be difficult for innovators. It is not enough to create new tools. Developers must also anticipate the risks their platforms might pose. Those who deliver data-driven guidance need to be transparent about how their AI models function. How is user data collected and used? What safeguards are in place to prevent harm? Are users monitored with consent and informed about potential risks? These are the kinds of questions regulators must be ready to confront.

The goal is not to slow innovation but to shape it responsibly. Clear guidance helps developers avoid missteps and reinforces public trust. Companies that engage thoughtfully with oversight are more likely to deliver meaningful, lasting impact.

One example is Nutu™, Willow Laboratories’ first digital health application designed to support a continuum of care. It offers real-time insights and behavior support that are grounded in science and designed for daily life. By prioritizing usability and responsible data practices, the platform demonstrates how innovation can align with ethical standards.

Joe Kiani, Masimo founder, says, “Our goal with Nutu is to put the power of health back into people’s hands by offering real-time, science-backed insights that make change not just possible but achievable.” This approach highlights the importance of designing digital health platforms that are grounded in evidence, transparent in their methods, and accountable to the people they serve.

Data Privacy and Security Concerns

As digital platforms collect vast amounts of personal health data, privacy becomes a critical issue. Users must know who has access to their information and how it is being stored, analyzed, and shared.

While laws like HIPAA in the U.S. and GDPR in Europe provide some protections, many health tech tools fall outside their scope. Consumer health apps not integrated into healthcare systems may not be bound by the same rules, creating gaps in oversight.

Startups must adopt robust security protocols and clear privacy policies. This includes encryption, anonymization, and user control over data sharing. Transparency around data practices builds trust and helps users feel safe engaging with the technology.

Bias and Fairness in AI Models

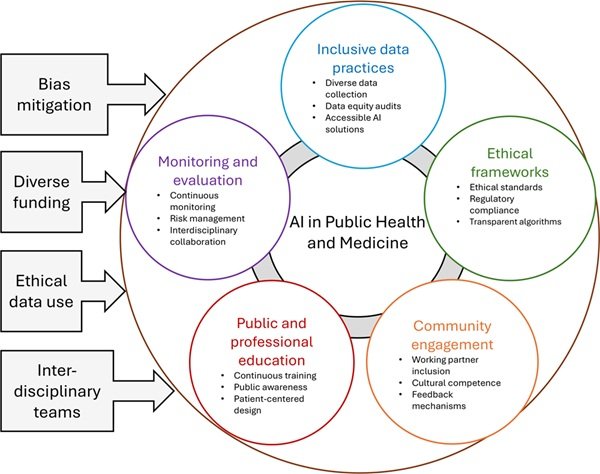

AI-driven tools offer powerful potential for personalized care, but they also carry risks of bias. If algorithms are trained on non-representative datasets, they may offer less accurate or useful guidance to certain groups.

For example, an app trained primarily on data from middle-income white patients might overlook risk patterns unique to Black, Indigenous, or low-income users. This could result in misdiagnoses or ineffective recommendations.

To prevent this, companies must use diverse datasets and regularly audit their algorithms for bias. Inclusion must be part of the design process, not an afterthought. Developers should also offer transparency about how their models work and invite peer review.

Ethical Use of Behavior Modification

Digital health platforms often rely on behavioral nudges to influence user decisions. These can be helpful when used responsibly, prompting users to drink water, exercise, or check their glucose levels. But they can also be intrusive or manipulative.

Users must retain autonomy over their health choices. Nudges should be evidence-based, non-coercive, and adjustable to user preferences. The goal is to support users in adopting healthier behavior, not to pressure them into it.

Platforms must be mindful of the psychological effects of constant health monitoring. Overemphasis on metrics can lead to anxiety or disordered thinking about food, exercise, or wellness.

The Role of Policy and Collaboration

Addressing these regulatory and ethical issues requires more than individual company efforts. It demands collaboration among technology firms, healthcare providers, academic institutions, and policymakers.

Governments must update regulations to keep pace with digital innovation. This includes clearer guidelines for AI use, improved enforcement of data privacy, and incentives for ethical design. Meanwhile, health systems must work with startups to validate tools and ensure they integrate effectively into patient care.

Educational initiatives are also needed to help users understand how digital health tools work, what their rights are, and how to use these platforms safely and effectively.

A Framework for the Future

As digital diabetes tools become more widespread, the need for thoughtful regulation and ethical stewardship grows. These technologies can empower users, improve outcomes, and lower costs, but only if they are developed and implemented with care.

Setting a standard for responsible innovation means prioritizing science, usability, and user empowerment. These principles provide a blueprint for how digital health tools can meaningfully support long-term well-being.

The healthcare system is on the cusp of digital transformation. Ensuring that this transformation is equitable, transparent, and accountable will determine whether it truly serves the public good. Ethical digital health is not just about what we can do. It’s about what we should do.